Section: New Results

Cognitive Assessment Using Gesture Recognition

Participants : Farhood Negin, Michal Koperski, Philippe Robert, François Brémond, Pau Rodrigez, Jeremy Bourgeois.

keywords: Human computer interaction, Computer assisted diagnosis, Cybercare industry applications, Medical services, Patient monitoring, Pattern recognition.

The Praxis test and clinical diagnosis

The Praxis test is a gesture-based diagnostic test that is accepted as diagnostically indicative of cortical pathologies such as Alzheimer's disease. Despite its simplicity, clinicians often skip it. In this study, we propose a novel framework that investigates the potential of static and dynamic upper-body gestures from the Praxis test to automate the test procedure for computer-assisted cognitive assessment of older adults.

To carry out both gesture recognition and correctness assessment of the performances, we have collected a novel and challenging RGB-D gesture video dataset (https://team.inria.fr/stars/praxis-dataset/) recorded with a Kinect v2. It contains 29 specific gestures suggested by clinicians, performed by both experts and patients. Moreover, we propose a framework to learn the dynamics of upper-body gestures, treating each video as a sequence of short-term gesture clips. Our approach first uses body part detection to extract image patches surrounding the hands. A fine-tuned convolutional neural network (CNN) [87] model then learns deep hand features, which are fed to a long short-term memory (LSTM) [79] network to capture the temporal dependencies between video frames.
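The two preprocessing ideas in the paragraph above, cropping a patch around a detected hand and cutting a video into short-term clips, can be sketched as follows. This is only an illustrative sketch: the function names, the square patch shape, the patch size, the clip length, and the stride are assumptions and are not taken from the original pipeline.

```python
from typing import List, Tuple

# (left, top, right, bottom); right and bottom are exclusive
Box = Tuple[int, int, int, int]


def hand_patch_box(hand_xy: Tuple[float, float], patch_size: int,
                   frame_w: int, frame_h: int) -> Box:
    """Square box of side patch_size centred on a hand joint.

    The box is shifted, not shrunk, so that it stays inside the frame.
    Assumes patch_size <= frame_w and patch_size <= frame_h.
    """
    half = patch_size // 2
    left = int(round(hand_xy[0])) - half
    top = int(round(hand_xy[1])) - half
    left = max(0, min(left, frame_w - patch_size))
    top = max(0, min(top, frame_h - patch_size))
    return (left, top, left + patch_size, top + patch_size)


def split_into_clips(num_frames: int, clip_len: int, stride: int) -> List[range]:
    """Frame-index ranges of fixed-length clips; a trailing partial clip is dropped."""
    return [range(start, start + clip_len)
            for start in range(0, num_frames - clip_len + 1, stride)]
```

For example, `hand_patch_box((960.0, 540.0), 64, 1920, 1080)` gives a 64x64 box centred in a 1920x1080 color frame, and `split_into_clips(10, 4, 3)` yields three overlapping clips. Each cropped patch would then be passed to the CNN, and the per-frame features of one clip to the LSTM.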

We report the results of four methods developed using different modalities. The experiments show the effectiveness of our deep learning based approach in both the gesture recognition and the performance assessment tasks. The clinicians' satisfaction with the assessment reports indicates the impact of the proposed framework on diagnosis.

Proposed Method

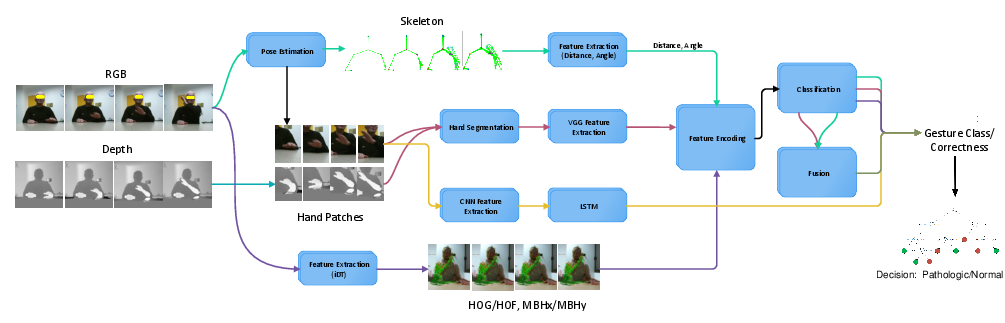

Four methods have been applied to evaluate the dataset (Figure 26). Each path (indicated by a different color) learns its own representation and performs gesture recognition independently, given the RGB-D stream and pose information as input.


Skeleton Based Method: Similar to [132], joint angle and distance features are used to define the global appearance of the poses. Unlike [132], a temporal window-based method is applied prior to classification to capture temporal dependencies among consecutive frames and to differentiate pose instances by temporal proximity.
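A minimal sketch of this kind of skeleton descriptor is given below, under stated assumptions: the joint names, the choice of angle triplets, the use of all pairwise distances, and the concatenation-based temporal window are illustrative choices, not the exact features of [132] or of the method above.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

Joint = Tuple[float, float, float]  # 3D joint position


def distance(p: Joint, q: Joint) -> float:
    """Euclidean distance between two joints."""
    return math.sqrt(sum((pi - qi) ** 2 for pi, qi in zip(p, q)))


def joint_angle(a: Joint, b: Joint, c: Joint) -> float:
    """Angle in radians at joint b, between segments b->a and b->c."""
    v1 = [ai - bi for ai, bi in zip(a, b)]
    v2 = [ci - bi for ci, bi in zip(c, b)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0.0 or n2 == 0.0:
        return 0.0  # degenerate segment; the angle is undefined, 0 is a placeholder
    cos = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against rounding error


def pose_features(pose: Dict[str, Joint],
                  angle_triplets: Sequence[Tuple[str, str, str]]) -> List[float]:
    """Per-frame descriptor: chosen joint angles, then all pairwise joint distances."""
    angles = [joint_angle(pose[a], pose[b], pose[c]) for a, b, c in angle_triplets]
    dists = [distance(pose[p], pose[q]) for p, q in combinations(sorted(pose), 2)]
    return angles + dists


def windowed_features(frames: Sequence[List[float]], window: int) -> List[List[float]]:
    """Concatenate each frame's descriptor with those of the window-1 preceding frames."""
    out = []
    for t in range(window - 1, len(frames)):
        stacked: List[float] = []
        for f in frames[t - window + 1:t + 1]:
            stacked.extend(f)
        out.append(stacked)
    return out
```

Stacking neighbouring frames is one simple way to give a frame-level classifier access to temporal context; the window length is a free parameter here.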

Multi-modal Fusion: The skeleton features capture only the global appearance of a person, while deep VGG features [117] extracted from the RGB video stream provide additional information about hand shape and the dynamics of hand motion, which is important for discriminating gestures, especially those with similar poses. Because directly concatenating the high-dimensional features performs sub-optimally, a late fusion scheme over class probabilities is adopted.
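Late fusion over class probabilities can be illustrated with a weighted average of the two classifiers' outputs. The averaging rule and the `weight_skeleton` parameter are assumptions made for this sketch; the text above does not specify which fusion rule was used.

```python
from typing import List, Sequence


def late_fusion(prob_skeleton: Sequence[float], prob_rgb: Sequence[float],
                weight_skeleton: float = 0.5) -> List[float]:
    """Weighted average of two class-probability vectors, renormalised to sum to 1."""
    if len(prob_skeleton) != len(prob_rgb):
        raise ValueError("both classifiers must score the same set of classes")
    if not 0.0 <= weight_skeleton <= 1.0:
        raise ValueError("weight_skeleton must lie in [0, 1]")
    fused = [weight_skeleton * ps + (1.0 - weight_skeleton) * pr
             for ps, pr in zip(prob_skeleton, prob_rgb)]
    total = sum(fused)
    if total == 0.0:
        raise ValueError("fused scores sum to zero")
    return [p / total for p in fused]


def predicted_class(probabilities: Sequence[float]) -> int:
    """Index of the most probable class."""
    return max(range(len(probabilities)), key=lambda i: probabilities[i])
```

Unlike early concatenation, each modality keeps its own classifier here, and only low-dimensional probability vectors are combined.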

Local Descriptor Based Method: Similar to action recognition techniques which use improved dense trajectories [128], a feature extraction step is followed by a Fisher vector-based encoding scheme.

Deep Learning based Method: Influenced by recent advances in representation learning, a convolutional neural network based representation of the hands is coupled with an LSTM to effectively learn both the temporal dependencies and the dynamics of the hand gestures. To decide on the condition of a subject (normal vs. pathologic) and make a diagnostic prediction, a decision tree is trained on the output of the gesture recognition task.
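The diagnostic step can be illustrated with the simplest possible decision tree, a depth-one tree (decision stump) over a single per-subject score. Everything specific here is hypothetical: the score (for instance, the fraction of gestures the recognizer judged correct), the label encoding, and the error-count split criterion are assumptions for illustration and do not describe the tree trained in this work.

```python
from typing import List, Sequence, Tuple


def fit_stump(scores: Sequence[float], labels: Sequence[int]) -> Tuple[float, int]:
    """Fit a depth-one decision tree on one feature.

    labels: 1 = pathologic, 0 = normal (hypothetical encoding).
    Returns (threshold, label predicted when score < threshold).
    """
    values = sorted(set(scores))
    if len(values) < 2:
        raise ValueError("need at least two distinct scores to place a split")
    best = None  # (training errors, threshold, label below threshold)
    for lo, hi in zip(values, values[1:]):
        thr = (lo + hi) / 2.0  # candidate split midway between neighbouring scores
        for below in (0, 1):
            errors = sum((below if s < thr else 1 - below) != y
                         for s, y in zip(scores, labels))
            if best is None or errors < best[0]:
                best = (errors, thr, below)
    return best[1], best[2]


def predict_stump(stump: Tuple[float, int], score: float) -> int:
    """Apply a fitted stump to a new subject's score."""
    thr, below = stump
    return below if score < thr else 1 - below


def predict_all(stump: Tuple[float, int], scores: Sequence[float]) -> List[int]:
    """Predictions for several subjects."""
    return [predict_stump(stump, s) for s in scores]
```

A full decision tree would apply the same split search recursively and over several features, such as per-gesture recognition outputs.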

Note that for all the developed methods we assume that the subjects are seated in front of the camera, with only their upper body visible. We also assume that the gestures are already localized, so the input to the system consists of short-term video clips.


In this work we made a stride towards non-invasive detection of cognitive disorders by means of our novel dataset and an effective deep learning pipeline that takes into account temporal variations, achieving average accuracy on classifying gestures for diagnosis. The performance measurements of the applied algorithms are given in Figure 27.

We proposed a computer-assisted solution that uses computer vision to support evaluation in an automatic diagnosis process. The system's evaluations can be delivered to clinicians for further assessment in their decision making. We have collected a unique dataset from 60 subjects, targeting the analysis and recognition of the challenging gestures included in the Praxis test. To better evaluate the dataset, we applied several baseline methods using different modalities. Using CNN+LSTM, we have shown strong evidence that complex near-range gesture and upper-body recognition tasks have the potential to be employed in medical scenarios. To be practically useful, the system will be evaluated with a larger population.